Why EKS Control Planes Don’t All Crash Together (and Yours Shouldn’t Either)

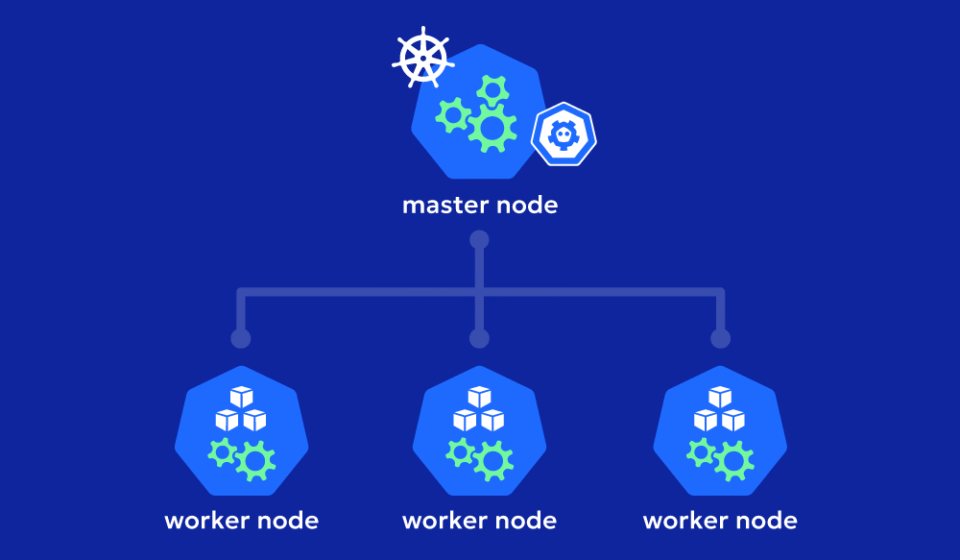

I don’t work for AWS, but I often wonder how they achieve near-zero downtime for services like Amazon EKS. In EKS, AWS manages the control plane while customers manage the worker nodes, and that control plane is deliberately designed for resilience:


- The API server nodes run across at least two Availability Zones in every AWS region.

- The etcd server nodes, which store cluster state, are deployed in an auto-scaling group spanning three AZs to ensure durability and fault tolerance.

This means a failure in one AZ doesn’t take down the entire control plane, which gives an elegant balance of availability and consistency.

Now, when I think about this, I can’t help but wonder:

👉 Does AWS achieve part of this resilience by leveraging concepts similar to AWS Placement Groups?

This is speculation on my part, but it wouldn’t be surprising if, under the hood, EKS makes strategic use of something like Spread Placement Groups to separate critical control-plane components across distinct hardware. Customer-managed worker nodes, in turn, can opt into Cluster Placement Groups when they need low-latency communication.

This idea brings us to a broader design principle that we, too, can apply in our own systems.

Understanding AWS Placement Groups

AWS provides three types of placement groups, each designed for different workload needs:

- Spread Placement Group – places each instance on distinct underlying hardware. Best for a small number of critical nodes that must not fail together.

- Cluster Placement Group – packs instances physically close together inside a single Availability Zone for low-latency, high-throughput networking. Ideal for tightly coupled compute workloads.

- Partition Placement Group – divides instances into logical partitions, each on its own set of racks, so that a rack failure affects only one partition. Useful for large-scale distributed systems like Hadoop or Kafka.

For this discussion, we’ll focus on Spread and Cluster groups.
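As a rough sketch, the three strategies map onto the `Strategy` parameter of the EC2 `CreatePlacementGroup` API. The helper below only builds the request arguments; the group names are made up for illustration, and the optional `create_placement_group` wrapper assumes boto3 is installed and AWS credentials are configured.

```python
from typing import Any, Dict, Optional


def placement_group_request(
    name: str, strategy: str, partition_count: Optional[int] = None
) -> Dict[str, Any]:
    """Build keyword arguments for the EC2 CreatePlacementGroup call."""
    if strategy not in ("cluster", "spread", "partition"):
        raise ValueError(f"unknown placement strategy: {strategy}")
    params: Dict[str, Any] = {"GroupName": name, "Strategy": strategy}
    if strategy == "spread":
        # Rack-level spread: each instance lands on a different rack.
        params["SpreadLevel"] = "rack"
    if strategy == "partition" and partition_count is not None:
        params["PartitionCount"] = partition_count
    return params


def create_placement_group(params: Dict[str, Any]) -> Dict[str, Any]:
    """Send the request with boto3. Not called here: it needs AWS credentials."""
    import boto3  # imported lazily so the sketch runs without boto3 installed

    ec2 = boto3.client("ec2")
    return ec2.create_placement_group(**params)


# Hypothetical group names, one per strategy.
REQUESTS = [
    placement_group_request("control-plane-spread", "spread"),
    placement_group_request("workers-cluster", "cluster"),
    placement_group_request("kafka-partition", "partition", partition_count=3),
]
```

Passing each entry of `REQUESTS` to `create_placement_group` would create the groups in whatever Region your boto3 session points at.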

Designing with Spread & Cluster Placement Groups

Imagine you’re building a Kubernetes-like system with critical management/control nodes and worker nodes. You want resilience for the control plane, but also fast, efficient communication for the workers.

🔒 Spread Placement Group for Management Nodes

Your management/control nodes (like EKS’s API servers or etcd) are the brains of the system. If they go down together, your entire platform becomes unavailable.

By placing them in a Spread Placement Group, you ask EC2 to put each node on distinct hardware: a separate rack with its own network and power source. The group can also span multiple AZs in the same Region.

- This minimizes correlated failure.

- A single hardware or rack issue won’t take down all your management nodes.

- Perfect for components where availability matters more than raw network performance.
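To make this concrete, here is a minimal sketch of how you might plan which AZ each management node goes into before launching it into a spread group. The node and AZ names are hypothetical. The one hard rule it encodes is the documented limit for rack-level spread groups: at most seven running instances per AZ per group.

```python
from collections import Counter
from typing import Dict, List

# Documented limit for rack-level spread placement groups.
MAX_SPREAD_INSTANCES_PER_AZ = 7


def plan_spread(nodes: List[str], azs: List[str]) -> Dict[str, str]:
    """Assign nodes to AZs round-robin so no AZ holds more than its fair share."""
    if not azs:
        raise ValueError("at least one Availability Zone is required")
    if len(nodes) > MAX_SPREAD_INSTANCES_PER_AZ * len(azs):
        raise ValueError("too many nodes for one spread placement group")
    return {node: azs[i % len(azs)] for i, node in enumerate(nodes)}


# Hypothetical three-node etcd tier spread over three AZs.
plan = plan_spread(
    ["etcd-0", "etcd-1", "etcd-2"],
    ["us-east-1a", "us-east-1b", "us-east-1c"],
)
nodes_per_az = Counter(plan.values())
```

With three nodes and three AZs, every node ends up in its own AZ, so losing a rack or even a whole AZ costs you one node at most.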

⚡ Cluster Placement Group for Worker Nodes

Worker nodes, on the other hand, often need high-throughput communication with each other. Think of workloads like:

- Machine learning training clusters

- High-performance computing (HPC)

- Real-time analytics engines

Here, the priority isn’t resilience against correlated hardware failure but ultra-low latency networking. A Cluster Placement Group ensures that:

- Worker nodes are packed close together inside a single Availability Zone.

- They get a higher per-flow throughput limit (up to 10 Gbps for a single flow) with minimal latency.

- The trade-off: a localized hardware failure can take out many of your workers together.

And that’s okay, because workers can usually be replaced quickly with node autoscalers such as CAST AI and Karpenter (two tools I like a lot), unlike management/control nodes.
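A minimal sketch of what launching workers into a cluster group could look like, expressed as arguments for the EC2 `RunInstances` call. The AMI ID, subnet ID, group name, and instance type below are placeholders, not values from any real setup. Setting `MinCount` equal to `MaxCount` follows the AWS recommendation to launch all instances of a cluster group in a single request, which makes the launch all-or-nothing.

```python
from typing import Any, Dict


def worker_launch_request(
    group_name: str, ami_id: str, instance_type: str, subnet_id: str, count: int
) -> Dict[str, Any]:
    """Build keyword arguments for EC2 RunInstances inside a placement group."""
    if count < 1:
        raise ValueError("count must be at least 1")
    return {
        "ImageId": ami_id,
        "InstanceType": instance_type,
        # Same min and max: either the whole fleet fits in the group or nothing launches.
        "MinCount": count,
        "MaxCount": count,
        # A cluster group lives in one AZ, so all workers share one subnet.
        "SubnetId": subnet_id,
        "Placement": {"GroupName": group_name},
    }


# Placeholder identifiers for illustration only.
request = worker_launch_request(
    group_name="workers-cluster",
    ami_id="ami-EXAMPLE",
    instance_type="c5n.18xlarge",
    subnet_id="subnet-EXAMPLE",
    count=8,
)
```

You would pass this as `boto3.client("ec2").run_instances(**request)`. If EC2 cannot fit all eight instances close together, the request fails with an insufficient-capacity error instead of giving you a partial fleet.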

Spread vs Cluster at a Glance

| Feature | Spread Placement Group | Cluster Placement Group |
|---|---|---|
| Goal | Maximize availability and minimize correlated failures | Maximize network performance and minimize latency |
| How it works | Each instance placed on distinct hardware (separate racks) | Instances packed close together in a single AZ |
| Best for | Control plane, critical management nodes | Worker nodes, HPC, ML training, data crunching |
| Failure impact | A single hardware failure affects only one node | A localized hardware failure may take out many nodes together |
| Trade-off | No proximity benefit for inter-node traffic; limited to 7 running instances per AZ per group | Reduced resilience in exchange for very high throughput |

Balancing Trade-offs

This design illustrates a key cloud architecture principle:

Not every tier of your system has the same availability vs. performance requirements.

- Control Plane (critical brains) → Use Spread Placement Group for resilience.

- Data Plane (worker muscle) → Use Cluster Placement Group for speed.

By intentionally mixing placement group strategies, you can design a system that is both highly available and highly performant.
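The difference in failure impact can be illustrated with a toy model. It assumes each node sits in exactly one failure domain and that exactly one domain fails at a time. The node names and domain labels are invented, and real hardware topology is more nuanced than this. The quorum rule is the usual majority rule used by etcd.

```python
from typing import Dict, List


def survivors(placement: Dict[str, str], failed_domain: str) -> List[str]:
    """Nodes still running after one failure domain goes down."""
    return sorted(n for n, domain in placement.items() if domain != failed_domain)


def worst_case_survivors(placement: Dict[str, str]) -> int:
    """Fewest nodes left alive across every possible single-domain failure."""
    if not placement:
        return 0
    return min(len(survivors(placement, d)) for d in set(placement.values()))


def has_quorum(alive: int, total: int) -> bool:
    """Majority rule: more than half of the members must be alive."""
    return alive >= total // 2 + 1


# Spread-style placement: every control node in its own failure domain.
control_plane = {"cp-0": "domain-a", "cp-1": "domain-b", "cp-2": "domain-c"}
# Cluster-style placement: all workers share one failure domain.
workers = {"w-0": "domain-x", "w-1": "domain-x", "w-2": "domain-x"}

cp_alive = worst_case_survivors(control_plane)      # 2 of 3 survive
worker_alive = worst_case_survivors(workers)        # 0 of 3 survive
cp_keeps_quorum = has_quorum(cp_alive, len(control_plane))  # True
```

The control plane keeps its majority after any single failure, while the worker tier can drop to zero and relies on fast replacement instead.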

Final Thoughts

AWS itself gives us inspiration through services like EKS, which are designed to tolerate failures without impacting customers. While we don’t know the exact implementation details, it’s possible AWS leverages concepts like Spread Placement Groups for resilience and Cluster Placement Groups for throughput under the hood.

By applying the same thinking in your own systems, you can balance durability with speed while designing architectures that gracefully handle failures without sacrificing performance.